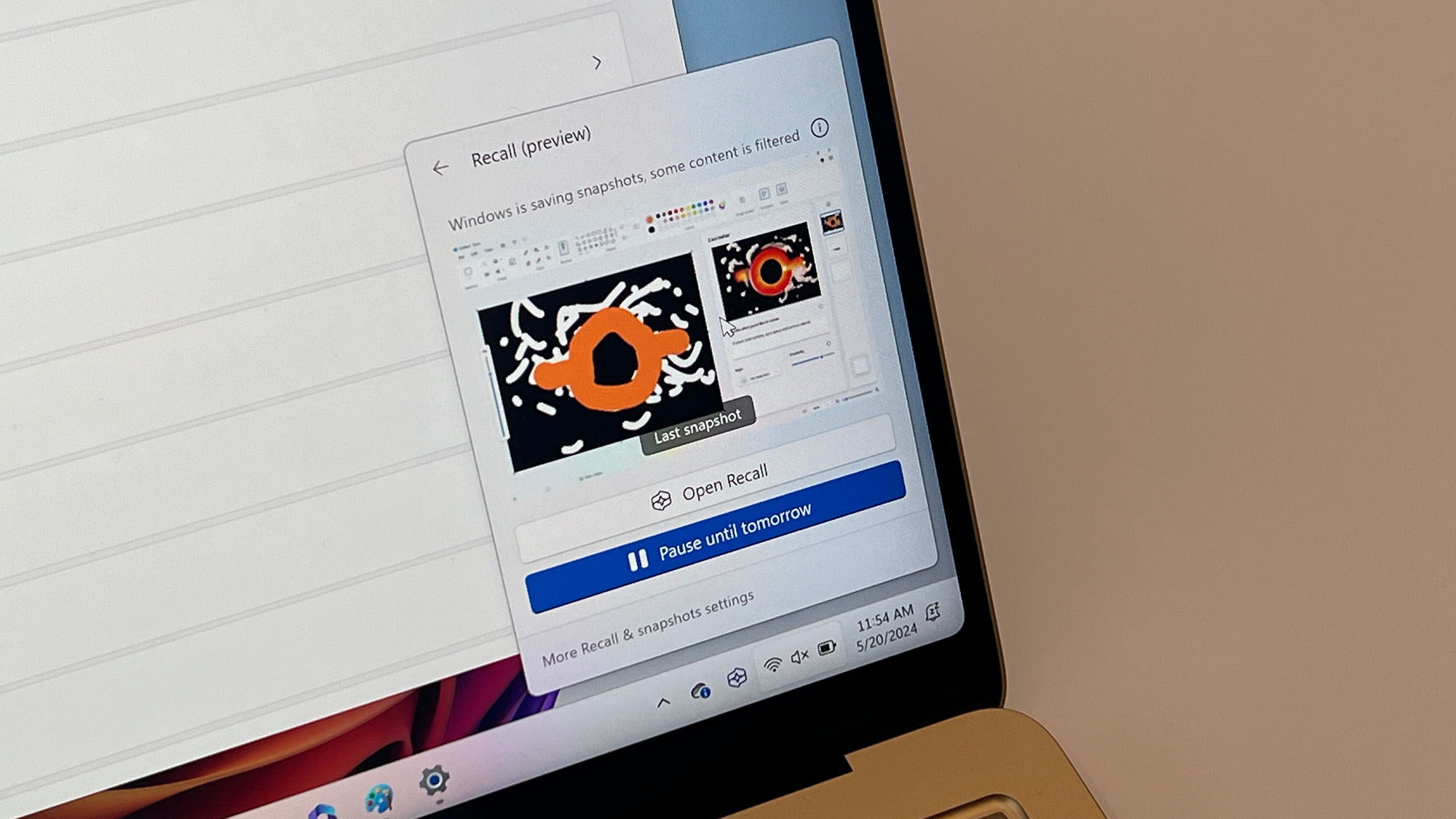

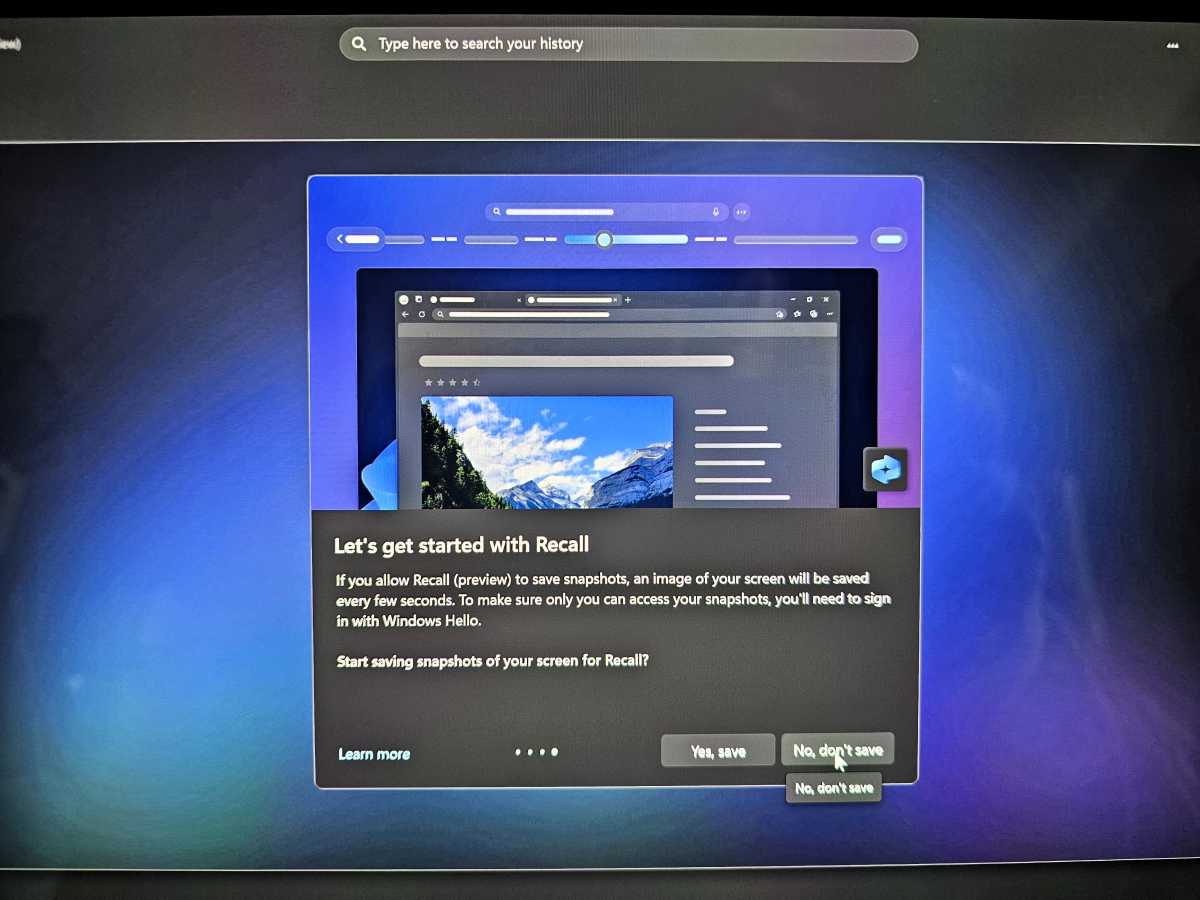

Since its announcement in May 2024, Recall, a feature of Microsoft’s Copilot+ PCs, has been under scrutiny over its handling of sensitive personal data. This AI-powered tool is designed to help users find anything they’ve seen or worked on previously by capturing screenshots of on-screen activity. It aims to streamline the process of locating past documents, emails, websites, and other pieces of information, offering a fast and convenient way to retrieve data users might otherwise have forgotten.

However, the rollout of Recall has not been without its troubles. After a delay, Microsoft released Recall in a preview version in late November. Despite the company’s assurances regarding privacy, a recent real-world test revealed serious problems with the feature’s ability to filter out sensitive information such as credit card numbers and Social Security numbers. This raises significant questions about whether Microsoft’s AI search tool is safe to use and whether it lives up to the privacy promises the company has made.

A Troubling Test: How Recall Failed to Filter Sensitive Data

A recent test performed by Tom’s Hardware on Microsoft’s Recall feature found that, despite having a built-in “Filter sensitive information” setting, the tool still captured personal data that it was supposed to avoid. The test involved Tom’s Hardware Editor-in-Chief, Avram Piltch, who entered a credit card number along with a random username and password into a Windows Notepad document. To his surprise, Recall still managed to capture the sensitive information, even with the filter enabled. Piltch further tested the feature using a fake social security number in a PDF loan application accessed through Microsoft Edge. Again, despite the sensitive data filter being active, Recall captured the social security number.

Piltch’s test results highlight a serious flaw in Recall’s ability to properly filter out personal information. While the AI search tool did manage to skip over some web pages containing similar sensitive data, it failed in key instances, suggesting that the feature cannot be fully trusted to safeguard privacy. These findings are particularly troubling because Recall is supposed to be a secure tool, designed to help users find relevant information without risking the exposure of sensitive data. The fact that this personal information was captured without the user’s consent raises significant privacy concerns.

The Role of AI in Managing Privacy: Microsoft’s Reassurances

Despite the backlash, Microsoft has been quick to defend the Recall feature. The company responded to the findings published by Tom’s Hardware with a statement saying that it has updated the tool to detect sensitive data such as credit card numbers, passwords, and Social Security numbers. According to Microsoft, when such data is detected, Recall does not save the screenshot, which should, in theory, keep that information from being retained.

Microsoft further explained that it will continue to refine the tool’s functionality and encouraged users to report instances where sensitive information is not properly filtered. The company has also included an option in the settings that allows users to anonymously share feedback about the apps and websites they wish to be excluded from Recall’s search. The feedback would help Microsoft improve the product, particularly in terms of privacy and security.

While these assurances sound encouraging, they do little to address the fundamental issue: Recall failed to filter out sensitive data in a real-world scenario. If the feature cannot reliably protect personal information, it risks exposing users to potential data breaches, even with privacy settings turned on. For many users, the thought of their credit card numbers or Social Security information being captured in a screenshot, even one that is not supposed to be stored, is a serious breach of trust.

The Unintended Consequences of AI-Driven Convenience

The Recall feature represents the growing trend of integrating AI into everyday tasks, making it easier for users to retrieve past information with minimal effort. On the surface, Recall seems like an incredibly helpful tool, particularly for those who juggle multiple tasks and need to find information quickly. However, as we’ve seen with this latest test, there are unintended consequences when it comes to the management of personal data.

AI-driven tools like Recall can be incredibly powerful, but they also pose a risk if not properly designed to safeguard sensitive information. Microsoft’s decision to roll out Recall as a feature that automatically takes screenshots of users’ activities and stores that data could be seen as a step too far in the name of convenience. In a world where digital privacy is an increasingly important issue, users are understandably wary of tools that may unintentionally expose their personal data.

This test underscores the ongoing tension between technological advancements and privacy protection. While the desire for faster, smarter tools is understandable, it’s equally crucial that companies like Microsoft place a strong emphasis on privacy controls and safeguards. If AI is to be fully integrated into daily life, it must be done in a way that doesn’t compromise personal security.

Privacy Concerns in the Age of AI

In the wake of this incident, the broader conversation around AI and privacy has only intensified. AI technologies are already playing a significant role in our lives, whether we’re using voice assistants like Siri or Alexa, relying on smart home devices, or simply conducting searches online. However, each of these technologies comes with its own set of privacy concerns. The Recall feature is merely the latest example of how AI can inadvertently collect and store sensitive data.

It’s worth noting that the test conducted by Tom’s Hardware is not an isolated incident. In fact, several high-profile cases in the past have illustrated how AI tools can inadvertently breach privacy boundaries. For instance, AI-powered virtual assistants have been found to listen to conversations even when the device is supposed to be off. Similarly, facial recognition software has faced backlash for being used without consent, leading to calls for stricter regulation on AI technologies.

Given the potential for privacy violations, it’s crucial that companies take a proactive approach when designing AI tools, particularly those that handle personal data. Privacy settings, like the one Microsoft has included in Recall, should be more than just optional features—they should be fundamental to the design of AI-powered products. Moreover, companies should be transparent about how data is collected, used, and stored, and they should ensure that users have full control over their information.

Moving Forward: What Needs to Change?

To regain user trust, Microsoft needs to do more than simply update the Recall feature. It’s not enough to rely on user feedback to improve the tool’s sensitivity settings. Instead, Microsoft should conduct a comprehensive review of Recall’s data handling procedures and privacy protocols. Transparency is key, and users should be fully informed about how their data is being handled, whether it’s being captured in screenshots or stored in any form.

Moreover, Microsoft should invest in improving the AI’s ability to identify and exclude sensitive data in real-time. While the tool’s current filter may prevent some instances of sensitive information from being captured, it is clear that it is not foolproof. More advanced algorithms and a better understanding of what constitutes “sensitive information” are essential to creating a reliable and secure experience for users.
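To make the difficulty concrete, here is a minimal Python sketch of pattern-based detection. It is an illustration only and is not how Recall’s filter works, which Microsoft has not detailed here. The names `find_sensitive`, `luhn_valid`, `CARD_CANDIDATE`, and `SSN_CANDIDATE` are hypothetical. The sketch looks for digit runs shaped like card numbers, confirms them with the Luhn checksum, and flags text formatted like a U.S. Social Security number.

```python
import re

# Hypothetical patterns for illustration; not Recall's actual filter.
# A card candidate is 13-19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
# An SSN candidate is the common NNN-NN-NNNN layout.
SSN_CANDIDATE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive(text: str) -> list[tuple[str, str]]:
    """Return (kind, matched_text) pairs for likely card numbers and SSNs."""
    findings = []
    for m in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", m.group())
        if luhn_valid(digits):
            findings.append(("card", m.group()))
    for m in SSN_CANDIDATE.finditer(text):
        findings.append(("ssn", m.group()))
    return findings


if __name__ == "__main__":
    sample = "Card: 4111 1111 1111 1111, SSN: 078-05-1120, password: hunter2"
    print(find_sensitive(sample))
```

A filter like this catches well-formatted numbers but misses anything without a fixed shape. The password in the sample goes undetected, which hints at why reliable filtering needs context about where and how data appears rather than pattern matching alone.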

Lastly, Microsoft should continue to work with independent security researchers and privacy experts to test Recall’s functionality. Third-party testing can help identify vulnerabilities that might not be immediately apparent during internal testing. Ultimately, Recall and similar AI tools must strike a delicate balance between user convenience and data protection. Without that balance, even the most innovative technologies may fail to live up to the privacy standards that users expect and deserve.

Can Recall Be Trusted?

Microsoft’s Recall feature offers a glimpse into the future of AI-driven productivity tools, but its recent privacy failures highlight the challenges that come with such innovations. As it stands, users should approach Recall with caution, particularly if they have sensitive information stored on their devices. While Microsoft’s assurances are a step in the right direction, it’s clear that much work remains to be done to ensure that this tool, and others like it, are truly secure.

In the end, the success of AI-powered tools like Recall hinges not only on their functionality but also on the trust that users place in them. Until Microsoft can prove that it has fully addressed the privacy concerns surrounding Recall, users should be wary of relying on this tool for sensitive tasks. As AI continues to evolve, it’s vital that privacy remains a top priority in the design and rollout of every new feature.